Architectural Overview

This page introduces the basic software architecture of the Tekkotsu framework. Most users link their code into our executable and run within the architecture described below. However, Tekkotsu can also be used as a library, where your own code defines the architecture and parts of Tekkotsu are called only "as needed". Jump to the Tool Development section to see how this style is employed.

The following description of the Tekkotsu executable's architecture begins at a "high level", where users will hopefully spend most of their development time, and gradually descends into the inner workings. The very lowest levels, where the framework directly interacts with the host operating system and hardware devices, are covered on the Porting page.

Information about the runtime user interface is covered on the TekkotsuMon page (end-user GUI) and the Execution page (hardware abstraction layer configuration and command line).

Introduction

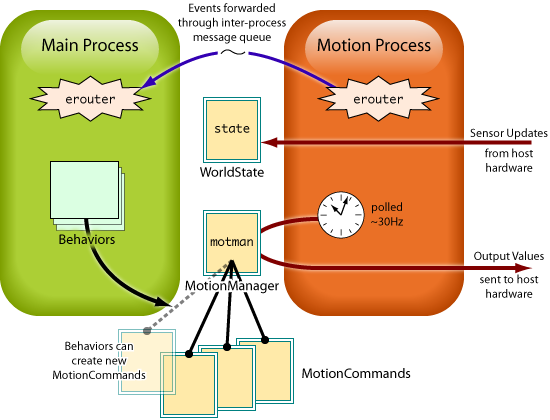

Tekkotsu is divided into two key processes. The "Motion" process runs in real time, meaning all of its code must complete within short, regular intervals so that it can guarantee reliable control of actuators and responsiveness to sensors. However, programming complex behaviors under this constraint is very difficult and often inefficient. Hence the second key process, "Main", provides a normal deliberative programming environment with few restrictions or expectations of users' code.

One of the major features of the Tekkotsu framework is the use of shared objects known as MotionCommands, which provide high-bandwidth, intuitive interaction between these two processes. Each MotionCommand implements some real-time task, such as walking or manipulating an object. The Motion process polls all active MotionCommands at high frequency to track the desired joint values over time. Meanwhile, the Main process can ruminate at length without disturbing the flow of data to the system. If Main eventually decides a change in course is needed, it can grab the MotionCommand of interest, directly call various specialized member functions to reconfigure its current and future maneuvers, and then turn it loose again. Because MotionCommands are kept in shared memory, there is zero latency between making a decision and enacting its execution.
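To make this division of labor concrete, here is a minimal, single-threaded sketch. The names (`MotionCommandSketch`, `WaveSketchMC`, `updateJoint`, `setAmplitude`, `motionFrame`) are hypothetical stand-ins, not Tekkotsu's actual MotionCommand interface: the Motion side calls a small update function on every active command each frame, while the Main side reconfigures a command between frames through an ordinary member function.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a MotionCommand: Motion asks it for a joint
// value once per frame, so the call must be quick and must never block.
struct MotionCommandSketch {
    virtual ~MotionCommandSketch() = default;
    virtual float updateJoint(unsigned frame) = 0;
};

// Hypothetical command that alternates a joint between +amplitude and -amplitude.
class WaveSketchMC : public MotionCommandSketch {
public:
    // Called from the "Main" side to reconfigure future maneuvers.
    void setAmplitude(float a) { amplitude = a; }

    float updateJoint(unsigned frame) override {
        return (frame % 2 == 0) ? amplitude : -amplitude;
    }

private:
    float amplitude = 0.0f;
};

// The "Motion" side: poll every active command once per frame.
std::vector<float> motionFrame(std::vector<MotionCommandSketch*>& active, unsigned frame) {
    std::vector<float> outputs;
    for (MotionCommandSketch* mc : active)
        outputs.push_back(mc->updateJoint(frame));
    return outputs;
}
```

In the real framework the two sides run concurrently, which is why access to shared commands is mediated by the MotionManager described later on this page.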

Note: The term "process" is used loosely. Depending on runtime configuration, Main and Motion can be either threads within a single process, or separate full-fledged OS processes via fork(). The latter mode is somewhat more restrictive because shared regions must be declared explicitly, but in exchange it reduces the chance of unexpected race conditions between threads. See the Multiprocess configuration setting on the Execution page for more information.
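The practical difference between the two modes can be seen with plain POSIX calls, independent of Tekkotsu. In this sketch (POSIX only; `valueSeenByParent` is a hypothetical helper), a forked child increments a counter. An ordinary global is copied into the child, so the parent never sees the change, while a region explicitly mapped with `MAP_SHARED` is visible to both. With threads instead of fork(), the ordinary global would be shared as well.

```cpp
#include <cassert>
#include <sys/mman.h>
#include <sys/wait.h>
#include <unistd.h>

static int privateCounter = 0;  // ordinary global: the child gets its own copy on fork()

// Hypothetical helper: returns the counter value the parent sees after a
// forked child increments it. With useSharedRegion, the counter lives in an
// explicitly shared anonymous mapping. Returns -1 on system-call failure.
int valueSeenByParent(bool useSharedRegion) {
    int* counter = &privateCounter;
    void* region = nullptr;
    if (useSharedRegion) {
        region = mmap(nullptr, sizeof(int), PROT_READ | PROT_WRITE,
                      MAP_SHARED | MAP_ANONYMOUS, -1, 0);
        if (region == MAP_FAILED) return -1;
        counter = static_cast<int*>(region);
    }
    *counter = 0;
    pid_t pid = fork();
    if (pid < 0) {
        if (region) munmap(region, sizeof(int));
        return -1;
    }
    if (pid == 0) {      // child: modify the counter and exit immediately
        *counter += 1;
        _exit(0);
    }
    waitpid(pid, nullptr, 0);  // parent: wait for the child to finish
    int seen = *counter;
    if (region) munmap(region, sizeof(int));
    return seen;
}
```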

The "Main" Process

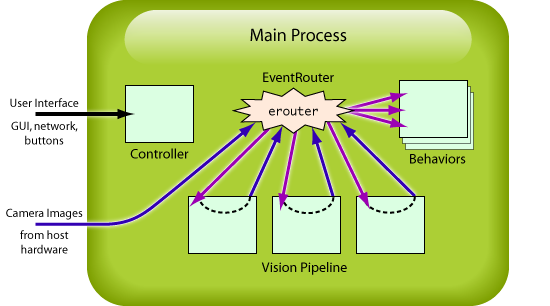

The Main process hosts most of your own code, as well as many of the framework's critical components, such as the event router, the Controller, and the vision system.

Main process: blue lines indicate calls to EventRouter::postEvent(), purple lines are events distributed to EventListener::processEvent(), and light green boxes are subclasses of BehaviorBase.

Behaviors

Behaviors are the core unit of programming in Tekkotsu. The DoStart() function is called when it is time to set things up, such as subscribing to event generators or instantiating new MotionCommands for later use. DoStop() is your signal to shut down: deregister event listeners, free data structures, etc. Behaviors do most of their processing in an event-based manner, as described in the next section. Only one event is ever processed at a time, so behaviors do not need to worry about mutual exclusion with other behaviors. (This is "cooperative" multitasking, unless a behavior spawns its own threads.)
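The lifecycle can be sketched with a self-contained stand-in. `BehaviorSketch`, `CountingBehavior` and `EventSketch` are hypothetical and only mirror the DoStart()/DoStop()/processEvent() roles described above; real behaviors derive from Tekkotsu's BehaviorBase and register with the event router.

```cpp
#include <cassert>
#include <memory>
#include <string>

struct EventSketch { std::string name; };  // hypothetical event type

// Hypothetical stand-in mirroring the roles of BehaviorBase's hooks.
class BehaviorSketch {
public:
    virtual ~BehaviorSketch() = default;
    virtual void DoStart() { active = true; }
    virtual void DoStop() { active = false; }
    virtual void processEvent(const EventSketch&) {}
    bool isActive() const { return active; }
private:
    bool active = false;
};

class CountingBehavior : public BehaviorSketch {
public:
    void DoStart() override {
        BehaviorSketch::DoStart();
        counter = std::make_unique<int>(0);  // set up resources
    }
    void DoStop() override {
        counter.reset();                     // free resources
        BehaviorSketch::DoStop();
    }
    void processEvent(const EventSketch&) override {
        if (counter) ++*counter;             // events arrive one at a time: no locking
    }
    int count() const { return counter ? *counter : -1; }  // -1 when stopped
private:
    std::unique_ptr<int> counter;
};
```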

Our StartupBehavior is similar to the 'init' process under Linux: if you want to control what is launched when Tekkotsu has finished loading, edit or replace the behavior returned by ProjectInterface::startupBehavior(). (This function is not defined by the framework; you must provide it in one of your project files to successfully link the Tekkotsu executable.) For more information on the startup procedure, see the Startup/Initialization section below.
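The link-time contract can be sketched as follows: the framework side only declares the function, and the project supplies its single definition, so the executable fails to link without it. The declaration mirrors the `ProjectInterface::startupBehavior()` name from the Startup/Initialization section, but the `BehaviorSketch` type, `MyStartup` class, `bootMainSketch` function and the static-local pattern are hypothetical choices for illustration.

```cpp
#include <cassert>

// Hypothetical minimal behavior type for this sketch.
struct BehaviorSketch {
    virtual ~BehaviorSketch() = default;
    virtual void DoStart() { started = true; }
    bool started = false;
};

// "Framework" side: only a declaration.
namespace ProjectInterface {
    BehaviorSketch& startupBehavior();
}

// "Project" side: the one required definition.
namespace {
    struct MyStartup : BehaviorSketch {
        void DoStart() override {
            BehaviorSketch::DoStart();
            // Hypothetical: create EmergencyStopMC, the Controller, etc. here.
        }
    };
}

BehaviorSketch& ProjectInterface::startupBehavior() {
    static MyStartup startup;  // constructed on first call, lives until exit
    return startup;
}

// "Framework" side again: Main starts whatever the project returned.
void bootMainSketch() {
    ProjectInterface::startupBehavior().DoStart();
}
```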

To help get your feet wet, we have two tutorials: Writing Behaviors and Dr. Touretzky's Beginner's Tutorial. Quick-start template files are also available in the project/templates directory. For a list of included behaviors, see the list of controls and the subdirectories of Behaviors.

Events

EventRouter is a class which manages distribution of events to listeners. It is globally accessible as erouter, so that you can register to listen for events or throw events from anywhere in the code. EventRouter also manages timers, so you can request timeouts or monitor conditions at a slower pace instead of every sensor update. Timer notification uses the same event interface as all other events.
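A toy router illustrates the two services described above: listener registration, and timers that are delivered through the same event interface as everything else. `RouterSketch`, `TimedEvent`, `addTimer` and `advanceTo` are hypothetical names and do not reproduce the signatures of Tekkotsu's EventRouter.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <vector>

struct TimedEvent { std::string generator; unsigned sourceID; unsigned timeMs; };
using Listener = std::function<void(const TimedEvent&)>;

// Hypothetical router: listeners subscribe by generator name.
class RouterSketch {
public:
    void addListener(const std::string& generator, Listener l) {
        listeners[generator].push_back(std::move(l));
    }
    void postEvent(const TimedEvent& e) {
        for (auto& l : listeners[e.generator]) l(e);
    }
    // Request a repeating "timer" event with the given source ID.
    void addTimer(unsigned sourceID, unsigned periodMs, unsigned nowMs = 0) {
        if (periodMs == 0) return;  // a zero period would fire forever
        timers.push_back({sourceID, periodMs, nowMs + periodMs});
    }
    // Called as the clock advances; due timers are posted like any other event.
    // In this sketch, listeners must not add timers while advanceTo() runs.
    void advanceTo(unsigned nowMs) {
        for (Timer& t : timers) {
            while (t.nextMs <= nowMs) {
                postEvent({"timer", t.sourceID, t.nextMs});
                t.nextMs += t.periodMs;
            }
        }
    }
private:
    struct Timer { unsigned sourceID, periodMs, nextMs; };
    std::map<std::string, std::vector<Listener>> listeners;
    std::vector<Timer> timers;
};
```

Because timer notifications are ordinary events, a behavior handles "check again in 250 ms" with the same processing code it uses for sensor or button events.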

Events are defined by a 3-tuple consisting of a generator, a source, and a type. Source IDs are specific to the generator that created them. For instance, source ID 2 from the button generator refers to a specific button, but from a vision object generator it would signal that a particular object was detected. The type of an event is either activation, status, or deactivation. Typically, an activation event is sent the first time in a series (such as button-down), a status event whenever the value changes (e.g. if the button is pressure sensitive), and a deactivation event after the last status event (such as button-up).

Thus, you can implement a behavior which responds for as long as a stimulus is active either by setting things up (e.g. request a timer, create a MotionCommand) on the activate event and halting them on the deactivate event, or by doing a bit of processing on each status event. However, not all generators provide status events — see the EventBase::EventGeneratorID_t enumeration for details on each generator and what kinds of events it provides.

You can subscribe to events via the EventRouter at different levels of specificity: all events from a given generator (such as all button events), all events from a specific generator and source (such as all chin button events), or only certain types of events from a specific source (such as only the deactivation (button-up) of the chin button). Activation and deactivation events are generally not duplicated as status events, so be careful to subscribe to all event types if you need every event rather than only status events.
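The three levels of specificity amount to a simple matching rule over the (generator, source, type) tuple. In this hypothetical sketch, an unset optional field in a `SubscriptionSketch` acts as a wildcard; the generator and source numbers are invented, and the real EventRouter registration API is not reproduced here (requires C++17 for `std::optional`).

```cpp
#include <cassert>
#include <optional>

enum class EventType { activate, status, deactivate };

// Hypothetical event key: the (generator, source, type) tuple.
struct EventKey {
    unsigned generator;  // which generator produced the event
    unsigned source;     // meaning depends on the generator
    EventType type;
};

// Hypothetical subscription: unset fields match anything.
struct SubscriptionSketch {
    unsigned generator;
    std::optional<unsigned> source;
    std::optional<EventType> type;

    bool matches(const EventKey& e) const {
        return e.generator == generator
            && (!source || *source == e.source)
            && (!type || *type == e.type);
    }
};
```

A subscription restricted to `EventType::status` would match neither the button-down nor the button-up event, which is exactly the caveat about status events above.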

Generators can also throw a subclass of EventBase to carry additional information. For example, the vision object detectors throw a VisionObjectEvent, which provides the (x,y) location of the object in the visual field.
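A listener that needs the extra fields typically checks the dynamic type of the event it receives. The classes below are hypothetical stand-ins for EventBase and VisionObjectEvent (the real classes carry more state and use different member names); the pattern shown is ordinary C++ `dynamic_cast` on a polymorphic base.

```cpp
#include <cassert>

// Hypothetical stand-in for the event base class.
struct EventBaseSketch {
    virtual ~EventBaseSketch() = default;  // polymorphic, so dynamic_cast works
    unsigned generator = 0, source = 0;
};

// Hypothetical subclass carrying the (x,y) location of a detected object.
struct VisionObjectEventSketch : EventBaseSketch {
    float centerX = 0, centerY = 0;
};

// Returns true and fills x,y only if the event carries a location.
bool objectLocation(const EventBaseSketch& e, float& x, float& y) {
    if (auto* v = dynamic_cast<const VisionObjectEventSketch*>(&e)) {
        x = v->centerX;
        y = v->centerY;
        return true;
    }
    return false;
}
```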

The EventRouter internally handles mutual exclusion if multiple threads post events at the same time. (postEvent() will block until all subscribed listeners have seen both previously enqueued events as well as the current event.)
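One way to obtain that blocking guarantee is a mutex-protected queue plus a second lock that lets only one thread deliver at a time. This is a hypothetical sketch of the idea (`SerializedRouterSketch` is invented and events are plain `int`s), not the EventRouter's actual implementation: a posting thread enqueues its event, then waits for the delivery lock and drains the queue, so by the time postEvent() returns, its event and every event queued before it have been delivered in order.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical router that serializes delivery across posting threads.
// Not re-entrant: a listener must not call postEvent() in this sketch.
class SerializedRouterSketch {
public:
    explicit SerializedRouterSketch(std::function<void(int)> l) : listener(std::move(l)) {}

    // Returns only after this event, and every event queued before it, was delivered.
    void postEvent(int event) {
        {
            std::lock_guard<std::mutex> q(queueLock);
            pending.push_back(event);
        }
        std::lock_guard<std::mutex> d(deliveryLock);  // one delivering thread at a time
        for (;;) {
            int next;
            {
                std::lock_guard<std::mutex> q(queueLock);
                if (pending.empty()) break;
                next = pending.front();
                pending.pop_front();
            }
            listener(next);  // called without queueLock, so other threads can keep enqueuing
        }
    }

private:
    std::function<void(int)> listener;
    std::mutex queueLock, deliveryLock;
    std::deque<int> pending;
};
```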

Controls

One important behavior is the Controller, which manages a menu-driven interface that allows you to activate, deactivate, and modify behaviors at run time. The Controller can be given input through the Aibo's buttons, the console, or a GUI. The Controller traps button events and automatically activates itself whenever the EmergencyStopMC activates. Trapping the button events lets you control behaviors through the Controller without inadvertently sending those events to currently running behaviors.

Each item in the menu system inherits from the ControlBase class. The base class provides virtual functions which are called for you when the appropriate user interactions occur. Through C++'s multiple inheritance, your class can be both a Control and a Behavior or EventListener.
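Because the menu hooks and the behavior hooks live in separate base classes, one object can serve both roles. `ControlSketch`, `BehaviorSketch` and `ToggleBehaviorControl` are hypothetical stand-ins (the real ControlBase and BehaviorBase have different and larger interfaces); the sketch only shows the shape of the multiple inheritance.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for a menu item base class.
struct ControlSketch {
    virtual ~ControlSketch() = default;
    virtual std::string menuName() const = 0;
    virtual void activate() = 0;  // called when the user selects this menu item
};

// Hypothetical stand-in for a behavior base class.
struct BehaviorSketch {
    virtual ~BehaviorSketch() = default;
    virtual void DoStart() { running = true; }
    virtual void DoStop() { running = false; }
    bool running = false;
};

// One object: selecting it in the menu toggles it as a behavior.
class ToggleBehaviorControl : public ControlSketch, public BehaviorSketch {
public:
    std::string menuName() const override { return running ? "Toggle [on]" : "Toggle [off]"; }
    void activate() override {
        if (running)
            DoStop();
        else
            DoStart();
    }
};
```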

The most commonly used control is the BehaviorSwitchControl, which is used to activate or deactivate an associated behavior. For a list of included controls, see the TekkotsuMon Tutorial or browse the Controls directory in our CVS repository.

Vision

Basic image processing is done in the Vision Pipeline, which is designed to perform a set of relatively generic transformations on each image taken by the camera. These operations are expected to be fast, and have their results reused in several different behaviors or further pipeline stages.

Tekkotsu provides a simple blob heuristic which attempts to identify ball-shaped blobs of particular colors. It subscribes to the region generator stage of the pipeline, which provides connected components of the run-length encoding stage, which is itself processing the results of the color segmentation stage. (These three pipeline stages are from the CMVision package.) Note that pipeline stages are not necessarily pixel-based — for instance, the region generator produces a list of region stats, the JPEG and PNG generators provide compressed images, etc.
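To show what one of these intermediate, non-image stages produces, here is a toy run-length encoder over a single row of color-segmented pixels (each pixel already replaced by a color-class index). It illustrates the idea of the run-length encoding stage only; `Run` and `encodeRow` are hypothetical and do not reflect CMVision's actual data structures.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical run record.
struct Run {
    uint8_t colorClass;  // segmented color index
    int start;           // first column of the run
    int width;           // number of consecutive pixels of that class
};

std::vector<Run> encodeRow(const std::vector<uint8_t>& row) {
    std::vector<Run> runs;
    for (int x = 0; x < static_cast<int>(row.size()); ++x) {
        if (!runs.empty() && runs.back().colorClass == row[x])
            ++runs.back().width;             // extend the current run
        else
            runs.push_back({row[x], x, 1});  // start a new run
    }
    return runs;
}
```

A region stage would then merge runs of the same class that touch on adjacent rows into connected components and summarize each one (area, bounding box, centroid), which is the kind of list a blob heuristic consumes.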

More application-specific, in-depth image processing is provided by the DualCoding package. This system is designed for analysis of an image, where many processing steps are needed to extract features or relationships of interest. An example of this usage is parsing a tic-tac-toe board, where lines are extracted and analyzed, and board positions are classified. (This task is an assignment in the Cognitive Robotics course.) Further, DualCoding provides routines for combining multiple images into overhead maps of the environment (MapBuilder), and for navigation using these maps (Pilot).

The two approaches are complementary — the DualCoding package can be used to create Vision Pipeline stages if appropriate. The pipeline is applicable when you want to perform processing on each of a sequence of images, and the results will be shared by multiple (or unknown) listeners. DualCoding is used by itself (outside the pipeline) when you want to process a single particular frame of known interest, or the processing is not generic enough to be useful to other behaviors.

The "Motion" Process

The Motion process provides real-time control of the system. This is necessary for a physical system with moving parts, where irregular updates can cause vibrations and jerky motion, producing imprecise control and unnecessary wear and tear.

WorldState

An EventBase::sensorEGID event is sent whenever WorldState is updated with new sensor readings. These sensors include joint positions, joint torques, button status, power status, as well as IR distance, accelerometer and temperature readings (when available on host hardware!). WorldState is globally instantiated in a shared memory region as state, so it is always directly accessible in either Main or Motion.

Unsensed state, such as the LED values, PID settings, and ear positions are also stored here, and are updated by the Motion process to reflect the last values given to the system.

Although it is invisible to the end user, there are actually multiple instances of WorldState. Anytime Tekkotsu is going to be running behavior code, it sets state to point to the most recently updated WorldState. This way Motion can continue updating WorldState in a real time manner for use by motion commands, without causing mutual exclusion problems from changing sensor values in the middle of a behavior operation. The take-home message is that sensor values are not volatile, and will not change asynchronously while a behavior or motion command is running.
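The effect resembles a multi-buffering scheme. The sketch below is a hypothetical, simplified model (two buffers, a single joint value, invented names such as `WorldStateSketch` and `snapshotForBehavior`), not Tekkotsu's implementation: the writer always fills a buffer other than the one behaviors are reading, and the pointer that behaviors use is repointed only between behavior invocations. This single-threaded sketch omits the synchronization a concurrent Main and Motion would need around the swap.

```cpp
#include <array>
#include <cassert>

// Hypothetical, heavily reduced world state.
struct WorldStateSketch { float jointPosition = 0; unsigned frame = 0; };

class StateBuffers {
public:
    // Motion side: write new readings into the buffer behaviors are NOT reading.
    void sensorUpdate(float jointPosition) {
        WorldStateSketch& back = buffers[1 - reading];
        back.jointPosition = jointPosition;
        back.frame = ++frameCount;
        fresh = true;
    }
    // Before running behavior code: point "state" at the most recent readings.
    const WorldStateSketch* snapshotForBehavior() {
        if (fresh) {
            reading = 1 - reading;
            fresh = false;
        }
        return &buffers[reading];
    }
private:
    std::array<WorldStateSketch, 2> buffers{};
    int reading = 0;
    unsigned frameCount = 0;
    bool fresh = false;
};
```

Usage: a behavior keeps the pointer it was handed for the duration of its processing, so a sensor update arriving mid-behavior does not change the values it sees.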

MotionManager

MotionManager provides simultaneous execution of one or more motion primitives. This allows for independent control of body parts, e.g., head and legs can be executing separate motion patterns, with priority arbitration and motion blending for conflict resolution. Motion primitives are shared memory regions based on MotionCommand (next section).
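One simple way to picture priority arbitration with blending for a single joint: each active command proposes a value with a priority and a weight; only proposals at the highest priority present are kept, and those are combined by a weighted average. This is a hypothetical illustration of the concept (`Proposal`, `arbitrate`); MotionManager's actual arbitration rules are not reproduced here (requires C++17 for `std::optional`).

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Hypothetical per-joint request from one MotionCommand.
struct Proposal {
    float value;     // desired joint value (radians)
    float priority;  // higher wins
    float weight;    // blending weight among equal priorities
};

// Returns the arbitrated joint value, or nothing if no command drives this joint.
std::optional<float> arbitrate(const std::vector<Proposal>& proposals) {
    if (proposals.empty()) return std::nullopt;
    float top = proposals.front().priority;
    for (const Proposal& p : proposals)
        if (p.priority > top) top = p.priority;
    float sum = 0, totalWeight = 0;
    for (const Proposal& p : proposals) {
        if (p.priority == top && p.weight > 0) {
            sum += p.value * p.weight;
            totalWeight += p.weight;
        }
    }
    if (totalWeight == 0) return std::nullopt;
    return sum / totalWeight;
}
```

Usage: two equal-priority commands blend, and a lower-priority one is ignored for as long as a higher-priority command drives the same joint.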

The MotionManager handles the grunt work of making sure both processes have mutually exclusive access to the MotionCommands. MotionManager is globally instantiated as motman, which will point to the shared memory region in both processes.

MotionCommands

MotionCommands are subclassed to provide their functionality. They should be fairly simple, possibly reactive control systems. The current state of the world, aptly named WorldState, is itself a shared memory region, and is therefore always available to MotionCommands, regardless of whether they are currently running in Main or Motion.

The MotionManager also automatically provides mutually exclusive access to the MotionCommands, so you don't have to worry about potential conflicts if the Main process is modifying a MotionCommand while the Motion process is requesting a joint update.
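The check-out/check-in discipline can be expressed with a scope-bound lock. `SharedCommand`, `CommandAccessor`, `PostureSketch` and `motionReadHeadTilt` are hypothetical names, and a plain `std::mutex` stands in for whatever cross-process locking MotionManager actually uses; the point is that the lock is released automatically when the accessor goes out of scope, even on an early return.

```cpp
#include <cassert>
#include <mutex>

struct PostureSketch { float headTilt = 0; };  // hypothetical command state

// Hypothetical: a command plus the lock that guards it.
struct SharedCommand {
    std::mutex lock;
    PostureSketch command;
};

// Holds the lock for its whole lifetime, so Motion never reads a half-updated command.
class CommandAccessor {
public:
    explicit CommandAccessor(SharedCommand& s) : guard(s.lock), target(s.command) {}
    PostureSketch* operator->() { return &target; }
private:
    std::lock_guard<std::mutex> guard;
    PostureSketch& target;
};

// Hypothetical Motion-side read performed each frame.
float motionReadHeadTilt(SharedCommand& s) {
    std::lock_guard<std::mutex> g(s.lock);
    return s.command.headTilt;
}
```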

However, there is one important drawback to making MotionCommands shared objects - you must be very careful in your usage of pointers. Memory allocated in one process will not be available in another process. Also, the same region may have different base addresses in each process, which even invalidates pointers to internal data structures. (In practice, in non-memory protection mode, they do share the same address, but it would be safer not to rely on this behavior.)
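A common workaround, shown here as a generic technique rather than anything Tekkotsu-specific, is to store offsets relative to the start of the shared region instead of raw pointers, and to convert back to a pointer using whichever base address the current process sees. `RegionNode`, `nodeAt` and `sumChain` are hypothetical; the usage copies the bytes to a second buffer to mimic the same region mapped at a different address.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <new>

// Hypothetical node that refers to another node in the same region by offset.
struct RegionNode {
    int value;
    std::ptrdiff_t nextOffset;  // offset from the region base; -1 means "none"
};

inline RegionNode* nodeAt(unsigned char* base, std::ptrdiff_t offset) {
    return offset < 0 ? nullptr : reinterpret_cast<RegionNode*>(base + offset);
}

// Sum the values along a chain, resolving offsets against this process's base.
int sumChain(unsigned char* base, std::ptrdiff_t head) {
    int total = 0;
    for (RegionNode* n = nodeAt(base, head); n; n = nodeAt(base, n->nextOffset))
        total += n->value;
    return total;
}
```

Usage: build two nodes at the start of one buffer, copy them into another buffer with a different address, and the chain still resolves correctly in both; a raw `next` pointer would still point into the first buffer.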

A good example of a MotionCommand is EmergencyStopMC, which listens for a double-tap on the back button of the Aibo. When this occurs, it reads the current positions of all joints and holds the joints at these values. It continues to monitor forces on the joints and will give under moderate pressure. Besides giving you a valuable way to pause the robot if it starts going haywire, this also lets you mold postures. EmergencyStopMC itself inherits from PostureMC, which provides significant functionality for setting postures, either through inheritance or programmatic control from other modules.
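The hold-and-give behavior can be pictured as a per-joint update rule: hold the position captured when the stop engaged, but if the measured force on the joint exceeds a threshold (standing in for "moderate pressure"), adopt the joint's new position as the held target. This is a hypothetical simplification (`HoldJoint`, `giveThreshold`, arbitrary units); EmergencyStopMC's actual logic and thresholds are not shown here.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical per-joint hold with compliance.
class HoldJoint {
public:
    explicit HoldJoint(float giveThreshold) : threshold(giveThreshold) {}

    // When the stop engages, freeze at the current measured position.
    void engage(float measuredPosition) { target = measuredPosition; }

    // Each frame: keep holding, unless the joint is pushed hard enough to give.
    float update(float measuredPosition, float measuredForce) {
        if (std::fabs(measuredForce) > threshold)
            target = measuredPosition;  // yield: the new pose becomes the held pose
        return target;
    }

private:
    float threshold;
    float target = 0;
};
```

Usage: a light disturbance leaves the target unchanged, while a firm push moves it, which is what lets you mold a posture by hand.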

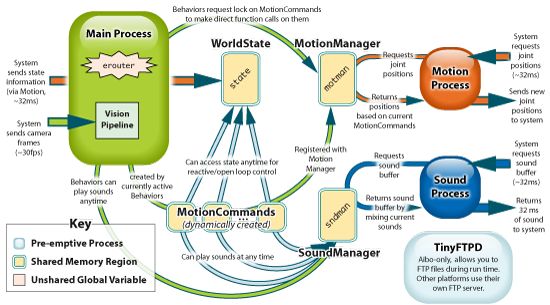

Complete Overview

Tekkotsu also makes use of a few other helper processes, though you will probably not need to interact with them directly. These are shown in this final diagram, giving the broadest overview of all the pieces working together.

The Sound process is very similar to the Motion process, with real time constraints and a shared memory object for interaction (SoundManager). On unix-based platforms, all of the "system" interactions are through a fourth process, the hardware abstraction layer, which is further described on the Execution and Configuration page.

Standards and Units

The standard reference frame used throughout the code defines left, right, etc. relative to the robot's point of view. Angles are positive counter-clockwise, with 0 pointing in the direction the robot faces.

Radians are used for any angular values, distances are in millimeters and time in milliseconds. For more specific information on units, see the documentation for WorldState, which contains the units for each of the sensors.
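Since angles are stored in radians while people often think in degrees, small conversion and normalization helpers are handy. These are generic, hypothetical helpers, not framework functions; `normalizeAngle` wraps an angle into (-π, π], where 0 is straight ahead and positive values are counter-clockwise (toward the robot's left when viewed from above).

```cpp
#include <cassert>
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

// Hypothetical unit helpers.
constexpr double degToRad(double degrees) { return degrees * kPi / 180.0; }
constexpr double radToDeg(double radians) { return radians * 180.0 / kPi; }

// Wrap an angle (radians) into (-pi, pi]; 0 is straight ahead, positive is counter-clockwise.
double normalizeAngle(double radians) {
    double a = std::fmod(radians, 2 * kPi);  // now strictly inside (-2pi, 2pi)
    if (a <= -kPi)
        a += 2 * kPi;
    else if (a > kPi)
        a -= 2 * kPi;
    return a;
}
```

Usage: a heading of 270° counter-clockwise normalizes to -90°, i.e. a quarter turn to the robot's right.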

Ideally, the code style used is:

- Java naming style:
  - classes camelCase with first letter capitalized: BigFooThing
  - variables camelCase starting with lower case: indexOfFooThing
  - constants should be all caps with underscores: NUM_OF_FOOS (members of RobotInfo are a notable and unfortunate historical exception)
  - namespaces all lowercase with underscores: image_utils
  - typedefs are either lower case with a _t suffix (index_t, generally for plain-old-data (POD) types) or capitalized camelCase (i.e. class-style, generally for class types such as templates with a particular set of parameters)
  - Array/Collection names are plural

- Tabs for indentation, spaces for alignment

  This is always a matter of religious debate, but tabs mean "indent", and spaces do not. Tabs allow each user to set the editor's indentation width as they wish, and eliminate the possibility of sloppy half-indentation. Editors which mix spaces and tabs assume a certain tab width and inflict that choice on everyone else. Emacs users, simply set your tab-width to match your indentation width and things will go smoothly. ;)

- Inline brackets, e.g.:

  ```cpp
  if(a==b) {
  	cout << "Hello World" << endl;
  }
  ```

  not:

  ```cpp
  if(a==b)
  {
  	cout << "Hello World" << endl;
  }
  ```
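A short fragment written to these conventions, using the placeholder names from the list above (all hypothetical): tabs indent, spaces align the trailing comments, braces are inline, and the collection parameter is plural.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

namespace image_utils {                        // namespace: lower case with underscores

typedef std::size_t index_t;                   // POD typedef: lower case with _t
const index_t NUM_OF_FOOS = 3;                 // constant: all caps with underscores

class BigFooThing {                            // class: capitalized camelCase
public:
	explicit BigFooThing(int fooValue) : value(fooValue) {}
	int getValue() const { return value; }
private:
	int value;
};

typedef std::vector<BigFooThing> FooList;      // class-type typedef: capitalized camelCase

// Hypothetical helper: sums the values of a collection of foos.
int sumFoos(const FooList& bigFooThings) {
	int total = 0;
	for(index_t indexOfFooThing = 0; indexOfFooThing < bigFooThings.size(); ++indexOfFooThing) {
		total += bigFooThings[indexOfFooThing].getValue();
	}
	return total;
}

}
```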

Startup/Initialization

So, now that you know what the pieces are, here is how the Tekkotsu executable boots up:

- The system constructs the processes. On the Aibo, these processes are OObjects, a structure defined by the OPEN-R SDK. Unix-based platforms use our own class called Process. If Multiprocess is set to true, each Process is started via fork(); otherwise it is started with pthread_create(), using some thread-specific keys.

- The processes send messages to each other to set up shared memory regions. (Main creates everything except the MotionManager, which Motion creates, and the SoundManager, which SoundPlay creates.)

- Main calls DoStart() on the reference returned by ProjectInterface::startupBehavior(). The implementation of StartupBehavior provided in the template project directory does the following:

  - Creates an EmergencyStopMC (a MotionCommand which holds the robot still)
  - Creates and initializes the Controller, and sets up the menu hierarchy
  - On supporting platforms (e.g. Aibo), fades in power to the joints over the course of a second (this keeps the joints from jerking suddenly as the robot starts up)

- The robot runs until it receives a shutdown signal (e.g. power button, controller menu item). On the Aibo, pushing the "pause" button on the chest simultaneously cuts power to the motors and sends a message to the Main process, which then requests a system shutdown.

- Shared memory regions are dereferenced and released.

- Each process's destructor is called.

- The Tekkotsu executable exits.
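The power fade-in step can be pictured as a ramp of the fraction of full joint power over about one second. The helper below is a hypothetical sketch of such a ramp; the name and the linear shape are assumptions, since the framework's actual fade curve is not described on this page.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical: fraction of full joint power to apply elapsedMs after startup,
// rising linearly from 0 to 1 over fadeMs and then staying at 1.
float powerFraction(unsigned elapsedMs, unsigned fadeMs = 1000) {
    if (fadeMs == 0) return 1.0f;  // no fade requested: full power immediately
    return std::min(1.0f, static_cast<float>(elapsedMs) / static_cast<float>(fadeMs));
}
```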